Table of Contents

- Why Multi-Device Methodology Influences Succes...

- Why Artificial Intelligence Transforms Custom ...

- Assessing Platform Strategies: How Dedicated ...

- The Role of Personalized Interfaces in Digita...

- How aitherai.dev Provides to Professional On...

- When Consistent Enhancement and Assessment A...

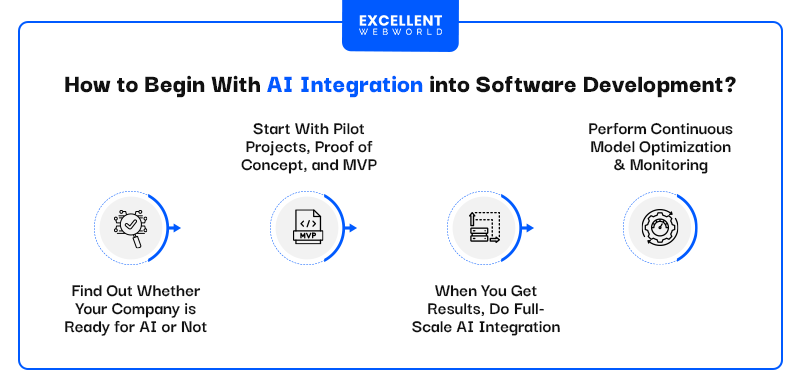

AI implementation is a marathon, not a sprint: it requires research, assessment, and testing to determine the role of AI in your company and to ensure secure, ethical, and ROI-driven solution deployment. This article covers the key considerations, challenges, and stages of the AI project cycle.

Your first task is to determine the role of AI in your processes. The easiest way to approach this is to work backward from your goal(s): what do you want to achieve with AI implementation?

Why Multi-Device Methodology Influences Success Rates

Choose use cases where you've already seen a convincing demonstration of the technology's potential. In the finance sector, AI has proven its value for fraud detection. Machine learning and deep learning models outperform traditional rules-based fraud detection systems by producing a lower rate of false positives and by doing better at recognizing new types of fraud.
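To make the false-positive comparison concrete, here is a minimal sketch of how the false positive rate could be computed for two detectors evaluated on the same labeled transactions. The labels and predictions are made-up illustrative values, and `false_positive_rate` is a hypothetical helper rather than part of any fraud detection product.

```python
def false_positive_rate(y_true, y_pred):
    """Share of legitimate transactions (label 0) wrongly flagged as fraud (prediction 1)."""
    false_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    negatives = sum(1 for t in y_true if t == 0)
    if negatives == 0:
        raise ValueError("no legitimate transactions to evaluate")
    return false_positives / negatives


# Illustrative labels only: 1 = fraud, 0 = legitimate.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
rules_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]  # hypothetical rules-based output
model_pred = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]  # hypothetical ML model output

rules_fpr = false_positive_rate(y_true, rules_pred)
model_fpr = false_positive_rate(y_true, model_pred)
print(f"rules-based FPR: {rules_fpr:.3f}, model FPR: {model_fpr:.3f}")
```

Running both detectors against the same held-out labels keeps the comparison fair; in practice you would also track recall, since a detector can lower its false positive rate simply by flagging less.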

Researchers agree that synthetic datasets can improve privacy and representation in AI, especially in sensitive industries such as healthcare or finance. Gartner predicted that by 2024, as much as 60% of data for AI would be synthetic. All the acquired training data then has to be pre-cleansed and cataloged. Use a consistent taxonomy to establish clear data lineage, and then monitor how different users and systems use the supplied data.

Why Artificial Intelligence Transforms Custom Website Design

In addition, you'll need to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams handle most data management processes with data pipelines: automated sequences of steps for data ingestion, processing, storage, and subsequent access by AI models.

Example of data pipeline architecture for data warehousing

With a robust data pipeline architecture, companies can process very large numbers of data records in milliseconds, in near real time.
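The split into training, validation, and test sets mentioned above can be sketched as follows. This is a minimal illustration using only the standard library; the 70/15/15 proportions, the fixed seed, and the `split_dataset` helper are assumptions for the example, not values from the article.

```python
import random


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle records reproducibly and split them into train, validation, and test lists."""
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for the test set")
    shuffled = list(records)               # copy so the caller's data is not reordered
    random.Random(seed).shuffle(shuffled)  # seeded shuffle keeps the split repeatable
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains becomes the test set
    return train, val, test


records = list(range(100))  # stand-in for real data records
train, val, test = split_dataset(records)
print(len(train), len(val), len(test))
```

A purely random split like this suits independent records; time-ordered data (for example, transactions) is usually split chronologically instead, so the test set reflects later data than the training set.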

Amazon's Supply Chain Finance Analytics team, in turn, improved its data engineering workloads with Dremio. With the current setup, the company builds new extract, transform, load (ETL) jobs 90% faster, while query speed has increased 10x. This made data more accessible for thousands of concurrent users and machine learning projects.

Assessing Platform Strategies: How Dedicated Digital Partners Such as Expert Digital Teams Deliver Results

The training process is complex, too, and prone to issues such as sample efficiency, training stability, and catastrophic interference, among others. Successful commercial applications are still few and mostly come from deep tech companies. Foundation models are the basis of generative AI. By starting from a pre-trained model and fine-tuning it, you can quickly train a new generative AI model.

Unlike traditional ML frameworks for natural language processing, foundation models require smaller labeled datasets because they already embed knowledge acquired during pre-training. That said, foundation models can still produce inaccurate and inconsistent results, especially when applied to domains or tasks that differ from their training data. Training a foundation model from scratch also requires massive computational resources.

The Role of Personalized Interfaces in Digital-First Website Creation

Training-serving skew occurs when model training conditions differ from deployment conditions. In effect, the model doesn't produce the desired results in the target environment because of differences in parameters or configurations.

Data drift happens when the statistical properties of the input data change over time, affecting the model's performance. For example, if the model dynamically optimizes prices based on the total number of orders and conversion rates, but these parameters change significantly over time, it will no longer provide accurate recommendations.
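A change in input statistics of the kind described above (often called data drift) can be flagged with a simple check. The sketch below measures how far the mean of recent inputs has moved from the training-time mean, in units of the training-time standard deviation. This is a deliberately simple mean-shift heuristic, not a full statistical test; the order counts and the threshold of 3.0 are made-up assumptions.

```python
from statistics import mean, stdev


def mean_shift_score(reference, current):
    """Absolute shift of the current mean, in units of the reference standard deviation."""
    ref_std = stdev(reference)
    if ref_std == 0:
        raise ValueError("reference window has zero variance")
    return abs(mean(current) - mean(reference)) / ref_std


def has_drifted(reference, current, threshold=3.0):
    """Flag drift when the mean moves more than `threshold` reference deviations."""
    return mean_shift_score(reference, current) > threshold


# Hypothetical daily order counts: training period vs. two later windows.
training_orders = [100, 104, 98, 101, 97, 103, 99, 102]
stable_orders = [101, 99, 100, 102]
shifted_orders = [150, 155, 149, 152]

print(has_drifted(training_orders, stable_orders))
print(has_drifted(training_orders, shifted_orders))
```

A mean-only check misses changes in spread or shape, so production monitoring typically compares full distributions per feature and alerts on sustained shifts rather than a single window.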

Instead, most teams maintain a database of model versions and perform iterative model training to progressively improve the quality of the final product. ... and only 11% are successfully deployed to production.

You then benchmark the resulting versions to determine the model version with the highest accuracy. Feature selection is another important technique. A model with too few features struggles to adapt to variations in the data, while too many features can lead to overfitting and worse generalization. Highly correlated features can also cause overfitting and degrade explainability methods.
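One way to spot highly correlated features before training is to compute pairwise Pearson correlations and list the pairs above a cutoff. The sketch below does this with the standard library only; the feature names, the sample values, and the 0.9 threshold are illustrative assumptions.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column has no defined correlation; treat as uncorrelated
    return cov / sqrt(var_x * var_y)


def highly_correlated_pairs(features, threshold=0.9):
    """Return (name_a, name_b, r) for every feature pair with |r| >= threshold."""
    pairs = []
    for (name_a, col_a), (name_b, col_b) in combinations(features.items(), 2):
        r = pearson(col_a, col_b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, r))
    return pairs


# Hypothetical feature columns for a pricing model.
features = {
    "orders": [10, 20, 30, 40, 50],
    "revenue": [105, 198, 310, 405, 495],
    "day_of_week": [3, 1, 4, 1, 5],
}
flagged = highly_correlated_pairs(features)
print(flagged)
```

Which feature of a flagged pair to drop is a judgment call; keeping the one that is cheaper to collect or easier to explain is a common choice.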

How aitherai.dev Provides to Professional Online Solutions

It's also the most error-prone one. Only 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

Deployment success across different machine learning projects

The reasons for failed deployments range from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased load.

The team needed to ensure that the ML model was highly available and delivered highly personalized recommendations from the titles available on the user's device, and that it did so for the platform's very large user base. To ensure high performance, the team decided to run model scoring offline and then serve the results once the user logs into their device.
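The offline-scoring pattern described above can be sketched as a batch job that precomputes recommendations and a cheap lookup at login. Everything here is hypothetical: the genre-affinity scoring stands in for a real recommendation model, and the in-memory dictionary stands in for whatever store a production system would use.

```python
def precompute_recommendations(users, top_k=2):
    """Offline batch job: score every user's available titles and cache the top results."""
    cache = {}
    for user_id, profile in users.items():
        affinity = profile["affinity"]
        ranked = sorted(
            profile["titles_on_device"],
            # Higher affinity first; title name breaks ties deterministically.
            key=lambda title: (-affinity.get(title["genre"], 0.0), title["name"]),
        )
        cache[user_id] = [title["name"] for title in ranked[:top_k]]
    return cache


def recommendations_on_login(cache, user_id, fallback=()):
    """Online path: a dictionary lookup, with a fallback for users not yet scored."""
    return cache.get(user_id, list(fallback))


# Made-up user profile for illustration.
users = {
    "u1": {
        "affinity": {"drama": 0.9, "comedy": 0.2},
        "titles_on_device": [
            {"name": "A", "genre": "comedy"},
            {"name": "B", "genre": "drama"},
            {"name": "C", "genre": "documentary"},
        ],
    },
}
cache = precompute_recommendations(users)
print(recommendations_on_login(cache, "u1"))
```

The trade-off is freshness: results are only as current as the last batch run, which is why the fallback for unscored users matters.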

When Consistent Enhancement and Assessment Are Necessary for Sustained Results

Ultimately, successful AI model deployments boil down to having effective processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) improve the deployment of regular software, MLOps increases the speed, efficiency, and predictability of AI model deployments.
